Scope/Description

- This guide will detail the process of adding OSD nodes to an existing cluster running Octopus 15.2.13. The process can be completed without taking the cluster out of production.

Prerequisites

- An existing Ceph cluster

- Additional OSD node(s) to add

- The new OSD node(s) have the same version of Ceph installed as the existing cluster

- Network configured on new OSD node(s)

- Inventory file for the new OSD node(s) configured in /usr/share/ceph-ansible/host_vars/

Steps

Check Ceph Version on all Nodes

- First, check that all nodes are running the same version of Ceph. Use the command for your distribution:

CentOS command:

rpm -qa | grep ceph

Ubuntu command:

apt list --installed | grep ceph
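Comparing package lists by eye gets tedious with more than a few nodes. The helper below is a minimal sketch, not part of the original procedure: it reads `host version` pairs on standard input and succeeds only if every host reports the same version. The function name `all_same_version` and the host names in the usage comment are hypothetical.

```shell
# Succeeds (exit 0) only if every "host version" line on stdin
# carries the same version string in its second column.
all_same_version() {
    awk '
        NF >= 2 { seen[$2] = 1 }          # remember each distinct version
        END {
            n = 0
            for (v in seen) n++
            exit (n == 1 ? 0 : 1)         # exactly one distinct version passes
        }
    '
}

# Possible usage (host names are placeholders; "ceph --version" prints
# the version number as its third field):
#   for h in OSD1 OSD2 OSD4; do
#       echo "$h $(ssh "$h" ceph --version | awk '{print $3}')"
#   done | all_same_version && echo "versions match"
```

An empty input is treated as a failure, so a loop that reaches no hosts does not report a false match.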

Set Maintenance Flags

- Set the norebalance, noout and norecover flags so that no data movement starts while the new node is being added.

ceph osd set norebalance

ceph osd set noout

ceph osd set norecover

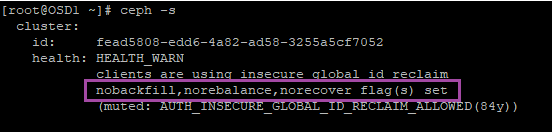

- Make sure the flags were set on the cluster. They are listed in the health section of the output:

ceph -s
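The flag check can also be scripted. The sketch below assumes the flags are available as a single comma-separated string, which is how the `flags` line of `ceph osd dump` presents them; the function name `flags_are_set` is hypothetical.

```shell
# Reads a comma-separated flag list on stdin and succeeds only if
# every flag passed as an argument is present in that list.
flags_are_set() {
    line=$(cat)
    for flag in "$@"; do
        case ",$line," in
            *",$flag,"*) ;;                               # flag found
            *) echo "missing flag: $flag" >&2; return 1 ;;
        esac
    done
}

# Possible usage on a node with admin access:
#   ceph osd dump | awk '/^flags/ {print $2}' \
#       | flags_are_set noout norebalance norecover
```

Wrapping the list in commas before matching prevents a flag name from matching as a substring of a longer one.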

Add New Nodes to Host File

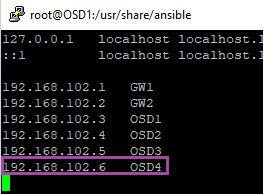

- Add the IP addresses of the new OSD nodes to the /etc/hosts file. In this example, OSD4 is being added to the cluster.

vim /etc/hosts

- Add passwordless ssh access to the new node(s)

ssh-copy-id root@OSD4

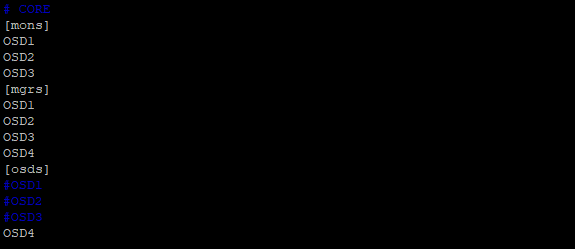

- Now add the new OSD node to the ceph-ansible hosts (inventory) file. Add it to the “osds” section only, and comment out the pre-existing OSD nodes so the playbooks do not touch them.

vim /usr/share/ceph-ansible/hosts

- Now run the following commands to confirm the server can ping the new OSD node(s). The only nodes that respond should be the ones being added to the cluster.

cd /usr/share/ceph-ansible

ansible -i hosts -m ping osds
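The inventory edit described above (leaving only the new node active under “osds”) can be sketched as a text filter. This is an illustration under assumptions, not the article's method: it assumes an INI-style inventory with one host per line under a `[osds]` header, and the function name `keep_only_osd` is hypothetical. Review the result before replacing the real file.

```shell
# Reads an INI-style inventory on stdin and comments out every host in
# the [osds] section except the one named in $1. Other sections, blank
# lines and lines already commented out pass through unchanged.
keep_only_osd() {
    awk -v keep="$1" '
        /^\[/ { in_osds = ($0 == "[osds]"); print; next }
        in_osds && NF && $0 !~ /^#/ && $1 != keep { print "#" $0; next }
        { print }
    '
}

# Possible usage (writes to a separate file for review first):
#   keep_only_osd OSD4 < /usr/share/ceph-ansible/hosts > /tmp/hosts.new
```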

Run the Device-aliasing Playbook to generate the host.yml file for the new OSD Nodes

- Once you confirm the new OSD node(s) can be pinged, run the device-alias playbook against them.

cd /usr/share/ceph-ansible

ansible-playbook -i hosts device-alias.yml

- This creates a .yml file for each new node in the /usr/share/ceph-ansible/host_vars/ directory.

- You can cat these files to make sure the playbook found all of your OSD drives. If you have journal SSDs, check that they are not listed as storage drives. If the SSD journal drives appear in the file, simply delete their lines or comment them out.

Run the Core Playbook to add new OSD Nodes

cd /usr/share/ceph-ansible

ansible-playbook -i hosts core.yml --limit osds

- When the playbook completes, the new nodes should be part of the cluster. Run “ceph -s” to confirm they have been added.

Unset Maintenance Flags

- Unset the flags that were set earlier. Backfill onto the new OSDs starts once they are cleared.

ceph osd unset noout

ceph osd unset norecover

ceph osd unset norebalance

- See the backfill/recovery guide for the settings that speed up or slow down the backfill process.
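Because the same three flags are set before the expansion and unset after it, a small wrapper keeps the two lists from drifting apart. The following is a minimal sketch; the function name `maintenance_flags` and the `CEPH` override variable are assumptions introduced here for illustration.

```shell
# Command used to talk to the cluster. Override with CEPH=echo for a
# dry run that only prints what would be executed.
CEPH=${CEPH:-ceph}

# Sets or unsets the three maintenance flags used in this guide.
maintenance_flags() {
    action=$1                      # "set" or "unset"
    case "$action" in
        set|unset) ;;
        *) echo "usage: maintenance_flags set|unset" >&2; return 2 ;;
    esac
    for flag in noout norecover norebalance; do
        $CEPH osd "$action" "$flag" || return 1   # stop at the first failure
    done
}

# Possible usage:
#   maintenance_flags set      # before adding the node
#   maintenance_flags unset    # after the core playbook finishes
```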

Verification

- Run “ceph -s”. The total number of OSDs should now include the drives on the node(s) added to the cluster.

Troubleshooting

- Ensure that all nodes are running the same Ceph version. If needed, apply minor updates to the existing Ceph packages on the cluster so that they match the new nodes.

- Ensure that every disk has been added to the cluster. For example, if there were 15 OSD disks before the expansion and the new node has 5 drives, the cluster should now show 20 OSD disks in total.
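The disk-count check above is simple arithmetic and can be scripted. This is a small sketch with a hypothetical function name, `osd_count_ok`; the actual count is passed in as an argument so the check itself does not depend on the cluster.

```shell
# Compares the OSD count after expansion with the expected total.
#   $1 = OSDs before expansion, $2 = drives added, $3 = OSDs seen now
osd_count_ok() {
    before=$1; added=$2; actual=$3
    expected=$((before + added))
    if [ "$actual" -eq "$expected" ]; then
        echo "OK: $actual OSDs"
    else
        echo "MISMATCH: expected $expected OSDs, found $actual"
        return 1
    fi
}

# Possible usage, counting the OSD ids listed by the cluster:
#   osd_count_ok 15 5 "$(ceph osd ls | wc -l)"
```

With the numbers from the example, `osd_count_ok 15 5 20` succeeds, while a result of 19 would point to a drive that was not deployed.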
