Scope/Description

- This article walks you through safely taking a Ceph node offline, then bringing it back online and returning the cluster to a healthy state. Set the flags described below when a node needs a reboot for an update or for general maintenance. The noout flag prevents the cluster from marking the node's OSDs as out, and the norebalance and norecover flags suspend data movement, so no rebalancing or recovery occurs while the node is down. This is beneficial when a node will be down only briefly. If a node will be out of the cluster for a longer period, it is better not to set the flags, so the cluster can heal itself.

Prerequisites

- A Ceph cluster.

- SSH access to a Ceph node.

Steps

Setting Maintenance Options

- SSH into the node you want to take down.

- Run the following three commands to set the cluster flags that prepare it for taking a node offline:

root@osd1:~# ceph osd set noout
root@osd1:~# ceph osd set norebalance
root@osd1:~# ceph osd set norecover
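If you prefer to set the flags in one step, the three commands above can be wrapped in a small shell function. This is a sketch, not part of the original procedure: the function name set_maintenance_flags is hypothetical, and it stops at the first ceph osd set that fails so you do not carry on with a partially flagged cluster.

```shell
#!/bin/sh
# Hypothetical helper: set the three maintenance flags in one call.
# Returns non-zero at the first flag that fails to set.
set_maintenance_flags() {
    for flag in noout norebalance norecover; do
        ceph osd set "$flag" || return 1
    done
}
```

Run it as set_maintenance_flags from a node that can issue ceph commands.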

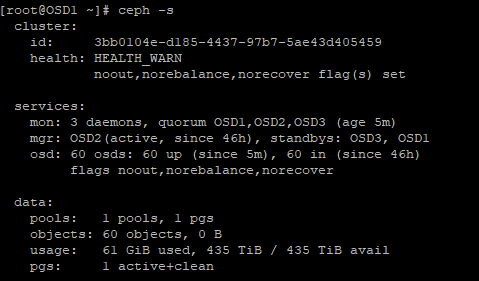

- Run ceph -s to confirm that the cluster is in a HEALTH_WARN state and that the three flags have been set.

root@osd1:~# ceph -s
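To check the flags from a script rather than by reading ceph -s, a sketch like the following can parse the flags line of ceph osd dump. It assumes that line has the form flags noout,norebalance,... (a comma-separated list); the function name check_maintenance_flags is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: confirm noout, norebalance and norecover are all set.
# Assumes `ceph osd dump` prints a line of the form "flags a,b,c".
check_maintenance_flags() {
    flags=$(ceph osd dump | awk '$1 == "flags" { print $2 }')
    for flag in noout norebalance norecover; do
        # Wrap both sides in commas so "noout" cannot match a longer flag name.
        case ",$flags," in
            *",$flag,"*) ;;
            *) echo "missing flag: $flag" >&2; return 1 ;;
        esac
    done
    echo "all maintenance flags set"
}
```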

- With the flags set, it is safe to shut down or reboot the node. Use either of the following:

root@osd1:~# shutdown now
root@osd1:~# reboot
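As a safety net, the shutdown or reboot can be guarded so that it only proceeds when noout is set, since noout is the flag that keeps the node's OSDs from being marked out. This hypothetical guarded_shutdown wrapper uses systemctl reboot and systemctl poweroff in place of the reboot and shutdown now commands, and assumes ceph osd dump prints the cluster flags on a line beginning with flags.

```shell
#!/bin/sh
# Hypothetical wrapper: only reboot or power off if the noout flag is set.
# Usage: guarded_shutdown reboot   or   guarded_shutdown poweroff
guarded_shutdown() {
    action=$1
    case "$action" in
        reboot|poweroff) ;;
        *) echo "usage: guarded_shutdown reboot|poweroff" >&2; return 2 ;;
    esac
    # Assumes a "flags a,b,c" line in the osd dump output.
    flags=$(ceph osd dump | awk '$1 == "flags" { print $2 }')
    case ",$flags," in
        *",noout,"*) systemctl "$action" ;;
        *) echo "noout is not set; refusing to $action" >&2; return 1 ;;
    esac
}
```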

Disabling Maintenance Options

- Once the node is back up and its OSDs have rejoined the cluster, unset the three flags set earlier:

root@osd1:~# ceph osd unset noout
root@osd1:~# ceph osd unset norebalance
root@osd1:~# ceph osd unset norecover
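Before clearing the flags, it can help to confirm that the node's OSDs are actually back up. The hypothetical unset_maintenance_flags function below refuses to unset anything while any OSD is still down. It assumes ceph osd dump lists each OSD on its own line starting with osd.N followed by its up/down state.

```shell
#!/bin/sh
# Hypothetical helper: clear the maintenance flags only when no OSD is down.
# Assumes per-OSD lines in `ceph osd dump` look like "osd.N up in ...".
unset_maintenance_flags() {
    down=$(ceph osd dump | awk '$1 ~ /^osd\./ && $2 != "up" { print $1 }')
    if [ -n "$down" ]; then
        echo "OSDs still down: $down" >&2
        return 1
    fi
    for flag in noout norebalance norecover; do
        ceph osd unset "$flag" || return 1
    done
}
```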

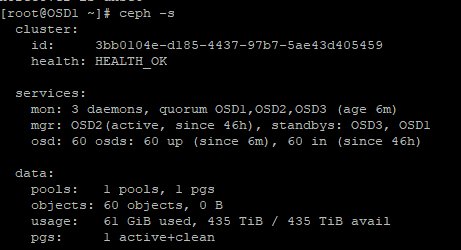

- Run ceph -s again to confirm that the flags are unset and the cluster reports a healthy state.

root@osd1:~# ceph -s

- If you need to take additional nodes offline, wait until the cluster returns to HEALTH_OK before repeating this process, starting again by setting the noout, norebalance, and norecover flags.
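The wait for HEALTH_OK between nodes can be scripted as a polling loop. In this sketch, wait_for_health_ok is a hypothetical name, the 30-minute timeout and 30-second interval are arbitrary defaults, and the check relies on ceph health printing its status (HEALTH_OK, HEALTH_WARN or HEALTH_ERR) at the start of its output.

```shell
#!/bin/sh
# Hypothetical helper: poll `ceph health` until it reports HEALTH_OK or a
# timeout expires. The default values are illustrative, not recommendations.
wait_for_health_ok() {
    timeout_s=${1:-1800}   # give up after this many seconds
    interval_s=${2:-30}    # seconds between checks
    waited=0
    until ceph health | grep -q '^HEALTH_OK'; do
        if [ "$waited" -ge "$timeout_s" ]; then
            echo "cluster not HEALTH_OK after ${timeout_s}s" >&2
            return 1
        fi
        sleep "$interval_s"
        waited=$((waited + interval_s))
    done
    echo "cluster is HEALTH_OK"
}
```

For example, wait_for_health_ok 3600 60 waits up to an hour, checking once a minute.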

Verification

- Running ceph -s reports HEALTH_OK and shows all nodes and their OSDs online.
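The verification can also be run as a script. This sketch (the name verify_all_osds_up_in is hypothetical) reports any OSD that is not both up and in, then checks ceph health for HEALTH_OK. It assumes the per-OSD lines of ceph osd dump begin with osd.N followed by the up/down and in/out states.

```shell
#!/bin/sh
# Hypothetical verification helper: fail if any OSD is not "up" and "in",
# or if the cluster health is anything other than HEALTH_OK.
verify_all_osds_up_in() {
    bad=$(ceph osd dump | awk '$1 ~ /^osd\./ && ($2 != "up" || $3 != "in") { print $1, $2, $3 }')
    if [ -n "$bad" ]; then
        echo "OSDs not up/in:" >&2
        echo "$bad" >&2
        return 1
    fi
    ceph health | grep -q '^HEALTH_OK' || { echo "cluster is not HEALTH_OK" >&2; return 1; }
    echo "all OSDs up and in; cluster HEALTH_OK"
}
```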

Troubleshooting

- Ensure you are on a Ceph node that has permission to run ceph commands (typically a node with the client.admin keyring).
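A quick way to confirm a node can run ceph commands is to check that the CLI is installed and that ceph health succeeds within a time limit. In this sketch, check_ceph_access is a hypothetical name and the 15-second limit is arbitrary; timeout is the coreutils utility, used so an unreachable cluster does not hang the shell.

```shell
#!/bin/sh
# Hypothetical pre-flight check: can this node talk to the cluster?
check_ceph_access() {
    if ! command -v ceph >/dev/null 2>&1; then
        echo "ceph CLI not installed on this node" >&2
        return 1
    fi
    # An arbitrary 15 s limit; a missing keyring or config usually fails fast.
    if ! timeout 15 ceph health >/dev/null 2>&1; then
        echo "ceph health failed: check the Ceph config and admin keyring" >&2
        return 1
    fi
    echo "ceph CLI can reach the cluster"
}
```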

- If you receive a slow ops warning for a monitor, run the following on the node reporting it, replacing hostname with that monitor's ID (usually the node's short hostname):

systemctl restart ceph-mon@hostname
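On a package-based installation where the monitor runs as the systemd unit ceph-mon@<id> and the monitor ID is the node's short hostname, the restart can be scripted and followed by a check that the unit came back. This is a sketch under those assumptions (cephadm-managed clusters name their units differently); restart_local_mon is a hypothetical name, and a different monitor ID can be passed as the first argument.

```shell
#!/bin/sh
# Hypothetical helper: restart the local monitor and confirm the unit is active.
# Assumes a non-cephadm install with units named ceph-mon@<id>.
restart_local_mon() {
    mon_id=${1:-$(hostname -s)}   # default: the node's short hostname
    systemctl restart "ceph-mon@${mon_id}" || return 1
    if systemctl is-active --quiet "ceph-mon@${mon_id}"; then
        echo "ceph-mon@${mon_id} is active"
    else
        echo "ceph-mon@${mon_id} failed to start" >&2
        return 1
    fi
}
```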

- If the OSDs do not come back up after the reboot, activate them with the following command:

ceph-volume lvm activate --all
