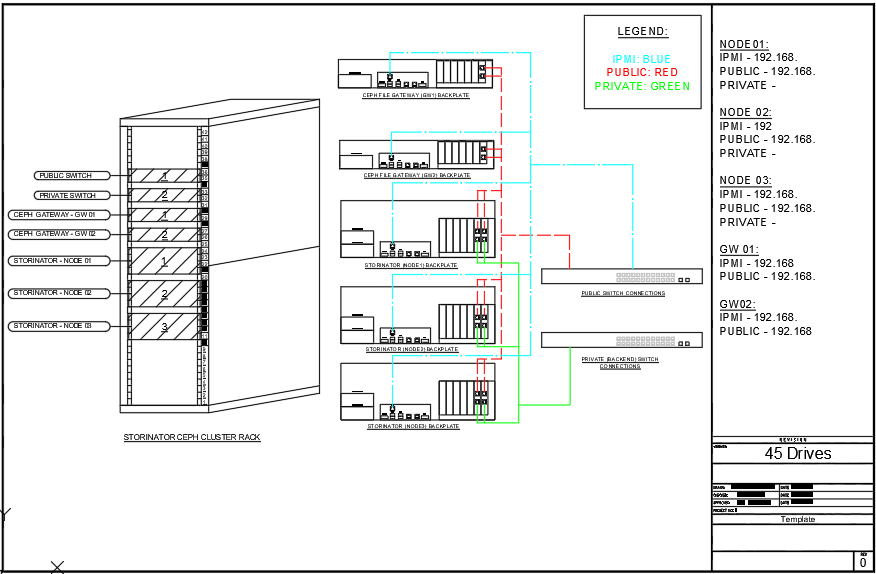

Scope/Description

This article provides a network diagram and explanation for a typical three-node cluster with two gateway nodes.

High Level Services and Network Diagram

Explanation of Services

Ceph is a license-free, open-source storage platform that ties together multiple storage servers to provide interfaces for object, block, and file-level storage in a single, horizontally scalable storage cluster with no single point of failure.

Ceph clusters consist of several different types of services, which are explained below:

Ceph Administrator Node

This is the node where Ansible is configured and run from. It provides the following functions:

● Centralized storage cluster management

● Ceph configuration files and keys

● Optionally, local repositories for installing Ceph on nodes that cannot access the Internet

Monitor Nodes

Each monitor node runs the monitor daemon (ceph-mon), which maintains a master copy of the cluster map. The cluster map includes the cluster topology. A client connecting to the Ceph cluster retrieves the current copy of the cluster map from the monitor, which enables the client to read data from and write data to the cluster.

It is important to note that Ceph can run with one monitor; however, running three monitors is strongly recommended to ensure high availability.
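
The three-monitor recommendation can be illustrated with a little arithmetic. Ceph monitors must form a strict majority (a quorum) to stay operational, so the number of monitors determines how many can be lost. The following is a minimal sketch of that calculation; the function names are hypothetical and are not part of any Ceph tooling.

```python
def quorum_size(monitors: int) -> int:
    """Return the smallest strict majority for the given monitor count."""
    if monitors < 1:
        raise ValueError("a cluster needs at least one monitor")
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """Return how many monitors can fail while a majority remains."""
    return monitors - quorum_size(monitors)


if __name__ == "__main__":
    # Compare a few common monitor counts.
    for count in (1, 2, 3, 5):
        print(
            f"{count} monitor(s): quorum={quorum_size(count)}, "
            f"tolerated failures={tolerated_failures(count)}"
        )
```

With one or two monitors no failure can be tolerated, while three monitors tolerate the loss of one, which is why three is the usual starting point.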

OSD Nodes

Each Object Storage Device (OSD) node runs the Ceph OSD daemon (ceph-osd), which interacts with logical disks attached to the node. Simply put, an OSD node is a server, and an OSD itself is an HDD or SSD inside the server. Ceph stores data on these OSDs. Ceph can run with as few as three OSD nodes, but production clusters realize better performance beginning at modest scales, for example five OSD nodes in a storage cluster. Ideally, a Ceph cluster has multiple OSD nodes so that isolated failure domains can be defined in the CRUSH map.

Manager Nodes

Each Manager node runs the MGR daemon (ceph-mgr), which maintains detailed information about placement groups, process metadata, and host metadata in lieu of the Ceph Monitor, significantly improving performance at scale. The Ceph Manager handles execution of many of the read-only Ceph CLI queries, such as placement group statistics, and also provides the RESTful monitoring APIs. The manager node is also responsible for hosting the dashboard, which gives the user real-time metrics as well as the ability to create new pools, exports, etc.

MDS Nodes

Each Metadata Server (MDS) node runs the MDS daemon (ceph-mds), which manages metadata related to files stored on the Ceph File System (CephFS). The MDS daemon also coordinates access to the shared cluster. The MDS daemon maintains a cache of CephFS metadata in system memory to accelerate IO performance. The cache size can be grown or shrunk based on workload, allowing performance to scale linearly as data grows. The MDS service is required for CephFS to function.

Object Gateway Nodes

Each Ceph Object Gateway node runs the Ceph RADOS Gateway daemon (ceph-radosgw). It is an object storage interface built on top of librados that provides applications with a RESTful gateway to Ceph storage clusters. The Ceph Object Gateway supports two interfaces:

S3 – Provides object storage functionality with an interface that is compatible with a large subset of the Amazon S3 RESTful API.

Swift – Provides object storage functionality with an interface that is compatible with a large subset of the OpenStack Swift API.

Recommended Network Configuration

45Drives recommends a 10 Gigabit backbone for all networks.

Ceph should be deployed on two separate networks:

- Public Network

- Cluster Network

Public Network

- The public network handles client-side traffic. This is the network on which your clients access data.

- The Monitor, Manager, and Metadata Server services, as well as Ceph clients, communicate on this network.

Cluster Network

- The OSD services communicate with each other on this network. It carries the following traffic:

- Replication

- Self-balancing

- Self-healing

- Heartbeat checks
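
As a rough illustration of the two-network layout, the sketch below builds the `[global]` section of a Ceph configuration file with the `public_network` and `cluster_network` options. The subnets shown are placeholder assumptions, and the helper `build_network_config` is hypothetical; substitute the address ranges used in your own environment.

```python
import configparser
import io
import ipaddress


def build_network_config(public_cidr: str, cluster_cidr: str) -> str:
    """Return a ceph.conf-style [global] fragment for the two networks."""
    # ip_network() raises ValueError for malformed subnets.
    public = ipaddress.ip_network(public_cidr)
    cluster = ipaddress.ip_network(cluster_cidr)

    # The two networks are meant to be separate, so reject overlaps.
    if public.overlaps(cluster):
        raise ValueError("public and cluster networks must not overlap")

    config = configparser.ConfigParser()
    config["global"] = {
        "public_network": str(public),
        "cluster_network": str(cluster),
    }

    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


if __name__ == "__main__":
    # Placeholder subnets, chosen only for this example.
    print(build_network_config("192.168.10.0/24", "192.168.20.0/24"))
```

Validating the subnets up front catches the common mistake of pointing both options at the same range, which would defeat the purpose of separating client traffic from OSD traffic.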

Bonding

45Drives recommends bonded interfaces for the public and cluster networks. To achieve this, nodes should have two dual-port NICs, bonding the top port on each card for the public network and the bottom port on each card for the cluster network. This protects against the failure of a single NIC controller.

Each bonded interface should have its own IP address.

The recommended bonding mode is LACP (802.3ad).

Other options include active-backup and adaptive load balancing.
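
The port layout described above can be sketched as a small planning check. It assumes two dual-port cards, each with one top and one bottom port. The interface names, the `Port` class, and `plan_bonds` are all hypothetical and serve only to show why each bond should span both cards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Port:
    card: str      # physical NIC the port belongs to
    position: str  # "top" or "bottom"
    name: str      # hypothetical OS interface name


def plan_bonds(ports):
    """Assign top ports to the public bond and bottom ports to the cluster bond."""
    bonds = {"public": [], "cluster": []}
    for port in ports:
        if port.position == "top":
            bonds["public"].append(port)
        elif port.position == "bottom":
            bonds["cluster"].append(port)
        else:
            raise ValueError(f"unknown port position: {port.position!r}")

    # Each bond needs one port from each card so that losing a
    # single card never takes down a whole network.
    for network, members in bonds.items():
        cards = {member.card for member in members}
        if len(members) != 2 or len(cards) != 2:
            raise ValueError(
                f"{network} bond must use one port from each of two cards"
            )
    return bonds


if __name__ == "__main__":
    ports = [
        Port("card0", "top", "nic0p0"),
        Port("card0", "bottom", "nic0p1"),
        Port("card1", "top", "nic1p0"),
        Port("card1", "bottom", "nic1p1"),
    ]
    for network, members in plan_bonds(ports).items():
        print(network, [member.name for member in members])
```

Because each bond takes one port from each card, a single card failure leaves both the public and cluster networks with one working link.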