Scope/Description

This article will walk through how to add “hba” and “port” buckets to an existing CRUSH map.

These CRUSH buckets then can be used as failure domains in a CRUSH rule.

For example, you may want to create a Ceph storage pool that distributes objects or object chunks across OSDs grouped by the HBA card they are attached to, rather than at the host level.
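Once the new bucket types exist, a replicated rule that uses one of them as its failure domain could be created along the lines of the sketch below. The rule name "replicated_hba" is hypothetical, "default" is assumed to be the CRUSH root, and the bucket type is assumed to be named "hba" as in this article. The function only prints the command so it can be reviewed before it is run.

```shell
# Sketch: print the command that would create a replicated CRUSH rule
# with "hba" (or another bucket type) as the failure domain.
# Assumptions: the rule name is hypothetical and the CRUSH root is "default".

hba_rule_cmd() {
    # $1 = rule name, $2 = CRUSH root, $3 = failure domain type (defaults to hba)
    printf 'ceph osd crush rule create-replicated %s %s %s\n' "$1" "$2" "${3:-hba}"
}

hba_rule_cmd replicated_hba default
```

Passing "port" as the third argument prints the equivalent command for a rule that separates replicas by port instead.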

Prerequisites

- An existing Ceph cluster built with 45Drives Storinators (15, 30, 45, 60).

- When using ceph-ansible-45d to build the cluster, run this process after the “core.yml” playbook and BEFORE any pools are created.

- Non-45Drives servers are not supported in the process outlined below.

- Storinator Hybrid Servers are not currently supported in the process outlined below.

- Storinator “Base” AV15s are not currently supported in the process outlined below.

- OSD nodes must have the client.admin keyring present. It can be removed after the process is complete if necessary.

- If “ceph -s” returns an error on an OSD node, copy the client.admin keyring over from a mon node.

- The steps below are to be done on each Storinator OSD node in the cluster.
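The keyring prerequisite can be checked before starting with a short sketch like the one below. It assumes the default file names "ceph.conf" and "ceph.client.admin.keyring" under /etc/ceph; adjust the names if the cluster uses different ones.

```shell
# Sketch: check that a node has the files the ceph CLI needs.
# Assumption: default file names under /etc/ceph.

check_ceph_files() {
    # $1 = config directory (defaults to /etc/ceph)
    dir="${1:-/etc/ceph}"
    missing=0
    for f in ceph.conf ceph.client.admin.keyring; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $dir/$f"
            missing=1
        fi
    done
    return "$missing"
}

if check_ceph_files; then
    echo "ceph.conf and client.admin keyring found"
else
    echo "copy the missing files over from a mon node, then re-run 'ceph -s'"
fi
```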

Steps

- Remote into one of the OSD nodes

ssh root@lab-ceph1
[root@lab-ceph1 ~]#

- Download script

[root@lab-ceph1 ~]# curl -LO https://raw.githubusercontent.com/45Drives/tools/master/opt/tools/modify-crush-map.sh

- Run “modify-crush-map.sh”

- You must specify how many HBA cards are in the system. For example, for a Q30:

[root@lab-ceph1 ~]# sh modify-crush-map.sh -H 2

- Repeat these steps for each OSD node in the cluster
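For clusters with several OSD nodes, the steps above can be sketched as a loop that prints one command per node. Host names other than lab-ceph1 are hypothetical, and the sketch assumes every node has the same number of HBA cards and accepts root SSH logins. It only prints the commands; run them once they look right.

```shell
# Sketch: print one ssh command per OSD node that downloads and runs the script.
# Assumptions: host names other than lab-ceph1 are hypothetical, every node
# has the same number of HBA cards, and root SSH access is available.

SCRIPT_URL="https://raw.githubusercontent.com/45Drives/tools/master/opt/tools/modify-crush-map.sh"

node_cmd() {
    # $1 = host name, $2 = number of HBA cards in that host
    printf "ssh root@%s 'curl -LO %s && sh modify-crush-map.sh -H %s'\n" "$1" "$SCRIPT_URL" "$2"
}

for host in lab-ceph1 lab-ceph2 lab-ceph3; do
    node_cmd "$host" 2
done
```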

Verification

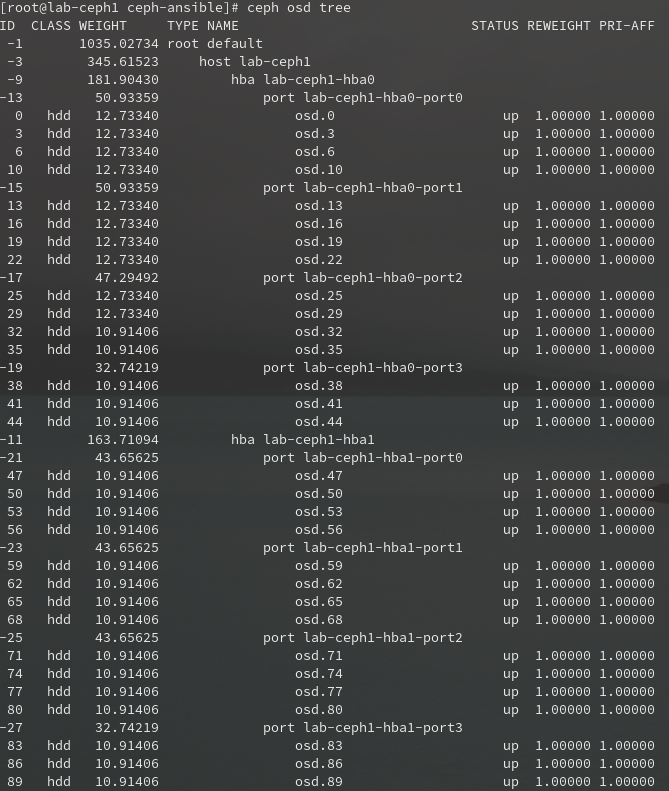

- Verify that the hba and port buckets have been created, and that the OSDs have been moved under them, by checking the output of “ceph osd tree”
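On larger nodes the tree output is long, so counting the new buckets can be quicker than reading it. The sketch below assumes the bucket type name ("hba" or "port") appears as its own whitespace-separated field on each bucket line of “ceph osd tree”; the function name is illustrative.

```shell
# Sketch: count CRUSH buckets of a given type in "ceph osd tree" output.
# Assumption: the type name ("hba" or "port") appears as its own
# whitespace-separated field on each bucket line.

count_buckets() {
    # $1 = bucket type; reads "ceph osd tree" output on stdin
    awk -v t="$1" '{ for (i = 1; i <= NF; i++) if ($i == t) { n++; break } } END { print n + 0 }'
}

# Usage on an OSD node with the admin keyring present:
#   ceph osd tree | count_buckets hba
#   ceph osd tree | count_buckets port
```

The hba count across the cluster should match the sum of the -H values passed to the script on each node.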

Troubleshooting

- If the script is terminated early or by accident, it is safe to run it again. It will not create or move any buckets or OSDs that are already in their intended location.

- Script Errors:

- error: Dependancy ‘bc’ required. yum/dnf install bc

- “bc” is required. Install it with the correct package manager: yum (el7), dnf (el8), apt (deb/ubuntu)

- error: This is not a ceph-osd node

- The node the script was run on does not have the ceph-osd packages installed, so it is not an OSD node in a Ceph cluster.

- [errno 13] error connecting to the cluster

- Error connecting to ceph cluster. This likely indicates the admin keyring is not present in the /etc/ceph directory. Copy it over from a monitor server.

- Error initializing cluster client: ObjectNotFound(‘error calling conf_read_file’,)

- Error reading ceph config file. This likely indicates the ceph.conf file is not present in the /etc/ceph directory. Copy it over from a monitor server.

- error: Storage pools are present

- Storage pools are present, therefore it is not safe to modify the CRUSH map. This script is designed to be run right after cluster creation, before any pools or data are created on the cluster.

- error: drive aliasing is not configured

- The file /etc/vdev_id.conf is not present on the system, therefore device aliasing is not configured. Run dmap to configure device aliasing.
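Two of the errors above depend only on the local node and can be checked before running the script. The sketch below approximates those checks; it is not copied from modify-crush-map.sh, and the function name is illustrative.

```shell
# Sketch: check local conditions behind some of the errors above.
# Assumption: these checks approximate, but are not copied from,
# the checks that modify-crush-map.sh performs itself.

preflight() {
    # $1 = path to the device alias file (defaults to /etc/vdev_id.conf)
    vdev="${1:-/etc/vdev_id.conf}"
    failed=0
    if ! command -v bc >/dev/null 2>&1; then
        echo "bc is not installed"
        failed=1
    fi
    if [ ! -f "$vdev" ]; then
        echo "$vdev not found; run dmap"
        failed=1
    fi
    return "$failed"
}

# Existing pools can be listed separately with: ceph osd pool ls
preflight || echo "fix the items above, then run modify-crush-map.sh"
```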
