KB450306 - Resolving Clock Skew Issues on PetaSAN

Posted on April 26, 2021 by Archie Blanchard

Source: 45Drives Knowledge Base, https://knowledgebase.45drives.com/kb/kb450306-resolving-clock-skew-issues-on-petasan/

This article covers resolving clock skew issues on PetaSAN. These issues are often shown on the dashboard and can be confirmed in the ceph.log file:

grep -i clock /var/log/ceph/ceph.log

If there are entries mentioning clock skew, continue with the rest of this article.
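For repeated checks, the log search above can be wrapped in a small helper that reports how many matching entries exist. This is a minimal sketch, not part of the original procedure: the function name `count_clock_entries` is hypothetical, and the default path is simply the one used in this article.

```shell
# Hypothetical helper: count log lines that mention "clock" (case-insensitive).
# Takes an optional log path; defaults to the ceph.log location used above.
count_clock_entries() (
    log_file=${1:-/var/log/ceph/ceph.log}
    # grep -c prints the number of matching lines. It exits non-zero when
    # nothing matches, so the status is ignored and only the count is used.
    grep -ci clock "$log_file" || true
)

# Example call (run on a node that has the log file):
#   count_clock_entries /var/log/ceph/ceph.log
```

A result of 0 suggests that no clock-related entries have been logged on that node. Any other value means the matching lines are worth reading in full.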

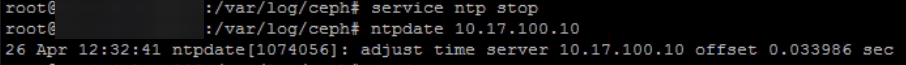

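Stop the NTP service, then run ntpdate against your NTP server (the server address is not shown here):

service ntp stop
ntpdate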

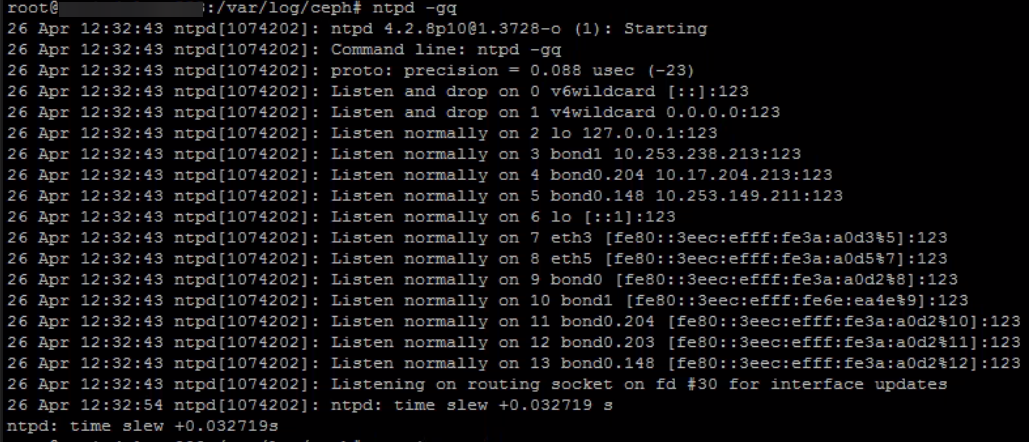

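Force a one-time clock correction. The -g flag allows a large initial adjustment, and -q makes ntpd exit once the clock is set:

ntpd -gq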

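Start the NTP service again, then restart the Ceph monitor on the node (the monitor instance name after ceph-mon@ is not shown here):

service ntp start
systemctl restart ceph-mon@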

Run date on each server. The times should be synced. Keep an eye on the cluster to see if clock skew occurs again, using the ceph.log file to assist with monitoring.
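Comparing the output of date by eye becomes tedious with more than a few nodes. The sketch below computes the spread between the earliest and latest timestamps collected from the servers. The function name `max_skew` and the host names in the usage comment are hypothetical, and collecting the values over ssh is an assumption about how the nodes are reached. Because it uses whole seconds and ssh adds its own delay, it only catches gross drift of a second or more.

```shell
# Hypothetical helper: print the difference (in seconds) between the largest
# and smallest epoch timestamps passed as arguments.
max_skew() (
    min=$1
    max=$1
    for t in "$@"; do
        if [ "$t" -lt "$min" ]; then min=$t; fi
        if [ "$t" -gt "$max" ]; then max=$t; fi
    done
    echo $((max - min))
)

# Example usage with placeholder host names (replace with your own nodes):
#   max_skew $(for h in node1 node2 node3; do ssh "$h" date +%s; done)
```

A result of 0 or 1 is consistent with synced clocks at this resolution. A larger value suggests that at least one node still needs the steps above.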